The 80% AI-Coding Stunt: Clever, Probably True — But Not That Relevant

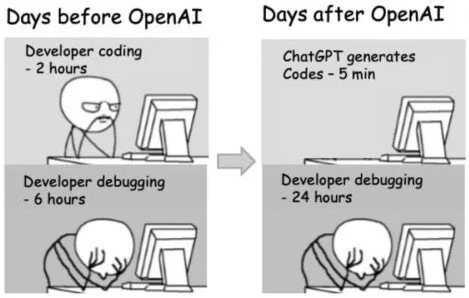

AI may already write most of the code at Meta and Anthropic. But productivity isn’t measured in LOC (Lines of Code): the real cost lies in process, quality, and long-term maintainability.

Fact: 100% of Agent Code Is Written by AI

Anthropic and Meta have (in)famously stated on several occasions that a large share of their code is now written by AI agents. Meta claimed in May that 30% of its code was generated this way, and expects to reach 50% by 2026. Meanwhile, Anthropic’s CEO, Dario Amodei, projected in March that AI would be writing 80–90% of code within months, a figure Boris Cherny, head of Claude Code, recently “officialized” at 80% on the Latent Space podcast. These statements, often quoted without their caveat (to be fair to Boris, he quickly added that “humans still did the directing and definitely reviewed the code”), triggered reactions ranging from outrage to extreme skepticism across the SWE community.

Blame is to Blame

Call me a fool or part of a cult, but personally, I’m inclined to believe the claims aren’t total BS, and may not even be exaggerated.

First of all, we’d be delusional to think their engineers use the same version of Claude Code or Codex as we do. They almost certainly work with a different class of firepower: unleashed, experimental models, likely backed by architectures fully optimized for performance and accuracy, far beyond what we get for a $200 monthly subscription. Expecting otherwise is like expecting Mercedes to drop a Formula One engine into an $80k consumer car.

But more importantly, the number itself isn’t that impressive once you step back and look at the bigger picture. If you run git blame (the Git command that attributes each line of code to someone, and now, to something), the 80% makes perfect sense. Like any other company, these firms write code not only for core, critical, and sensitive features, but also for documentation, landing pages, trivial frontends, prototypes, and every other low-stakes bit of code you can think of.
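To make the blame mechanism concrete, here is a minimal Python sketch of how a blame-based “AI share” could be computed for a set of files. It parses the output of `git blame --line-porcelain`, which repeats the commit header (including an `author` line) for every line of the file, and counts lines per author. The set of AI author names is a hypothetical input: whether agent-written commits carry a distinct author at all depends on your tooling (some tools add a `Co-Authored-By` trailer to the commit message instead, which blame does not report). The function names are illustrative, not part of any tool.

```python
import subprocess
import sys
from collections import Counter


def count_lines_by_author(porcelain: str) -> Counter:
    """Count blamed lines per author in `git blame --line-porcelain` output.

    With --line-porcelain, git repeats the commit header for every line of
    the file, so each line contributes exactly one "author <name>" header.
    """
    counts: Counter = Counter()
    for line in porcelain.splitlines():
        # Source lines are prefixed with a tab, so they never match here;
        # "author-mail", "author-time" and "author-tz" start with "author-".
        if line.startswith("author "):
            counts[line[len("author "):]] += 1
    return counts


def ai_share(counts: Counter, ai_authors: set) -> float:
    """Fraction of blamed lines whose author is in the (hypothetical) AI set."""
    total = sum(counts.values())
    if total == 0:
        return 0.0
    ai_lines = sum(n for author, n in counts.items() if author in ai_authors)
    return ai_lines / total


def blame_file(path: str) -> Counter:
    """Run git blame on one tracked file (must be called inside a git repo)."""
    result = subprocess.run(
        ["git", "blame", "--line-porcelain", "--", path],
        capture_output=True, text=True, check=True,
    )
    return count_lines_by_author(result.stdout)


if __name__ == "__main__":
    # Hypothetical author name used by an agent; adjust to your own setup.
    AI_AUTHORS = {"Claude"}
    totals: Counter = Counter()
    for path in sys.argv[1:]:
        totals += blame_file(path)
    print(f"AI-attributed lines: {ai_share(totals, AI_AUTHORS):.0%}")
```

Run from inside a repository with the files to inspect as arguments. Note what the number means: blame credits each line to the commit that last touched it, so a README, a generated landing page, and a critical billing function all weigh the same, and a mass reformat can shift attribution wholesale.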

Natalie Breuer at LinearB (a productivity platform for engineers) argues in a lengthy critique of LOC metrics (“an outdated approach [that] creates misaligned incentives, rewards inefficiency”) that the code that truly matters (logic, problem-solving, reusable functions) makes up only a small part of any codebase. She doesn’t put a number on it, since measuring is tricky and depends heavily on what each company considers important, but Andriy Burkov (author of the series “The Hundred-Page […] Books”) was not afraid to share his own ballpark estimate on LinkedIn: “10% boilerplate, 40% reusing libraries and APIs, 20% reinventing the wheel (writing code that someone has already written), and 30% brand new business- or app-specific code.” It’s a reasonable split, to which I would add documentation (if it lives in the codebase) and testing, both of which, I believe, deserve their own category.

Since the rise of LLMs, the act of coding has become increasingly marginal in a SWE’s routine. In my own experience working solo on a pet project (a full productivity and data suite for our VC fund), almost everything, significant or not, has been written by AI, at least according to git blame. Yet as important as the code is, what truly makes the service work is the countless hours of code review and step-by-step guidance. Code is merely the glue between you and your objectives.

This is why Big Tech companies are carrying out sometimes massive layoffs on one hand while recruiting brilliant minds on the other: they need more brains and fewer hands.

What Is Code, Anyway?

So if everyone agrees that LOC has never been a good proxy for productivity or quality, and is probably an even worse one now, what actually matters, and how should productivity be measured?

Arnaud Porterie (founder of the late Echoes HQ) has dedicated much of his career to evangelizing this topic among tech teams, chasing the key metrics that might make a difference. He admits he hasn’t found a definitive framework: each company has too many moving parts to generalize into a universal recipe. But he’s clear on one thing: LOC ain’t part of it. For Stan Girard of Quivr, measuring the entire process has become paramount. Sure, shipping fast is the obvious upside of AI coding. But unless it’s a fire-and-forget service or a quick demo prototype, the real question is: what is the overall cost of the code, including, obviously, the cost of the legacy it leaves behind?

Gergely Orosz and Laura Tacho from The Pragmatic Engineer recently published a comprehensive analysis of AI’s impact: a goldmine of insights and recommendations for tracking smart, useful metrics before and after AI adoption. Interestingly enough, their survey of 18 tier-1 US companies highlights the lack of consensus on what actually matters, and no genuinely new metric has emerged: PR throughput, deployment frequency, PR cycle time, and lead time for changes remain the staples. You could conclude that measuring an agent’s productivity is much like measuring that of a flesh-and-blood SWE: it’s tough!
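For readers who want to track the classic metrics listed in the survey, here is a minimal Python sketch computing two of them from raw timestamps. It assumes PR cycle time runs from opening to merge (some teams start the clock at the first commit instead) and uses one per-week rate for both PR throughput (when fed merge times) and deployment frequency (when fed deploy times). The `PullRequest` record and the function names are hypothetical; in practice the timestamps would come from your Git host’s API or your CI/CD logs.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Iterable, Optional


@dataclass
class PullRequest:
    opened_at: datetime
    merged_at: Optional[datetime]  # None while open, or if closed unmerged


def median_cycle_time(prs: Iterable[PullRequest]) -> Optional[timedelta]:
    """Median open-to-merge time over merged PRs; None if nothing was merged."""
    durations = [pr.merged_at - pr.opened_at for pr in prs if pr.merged_at is not None]
    return median(durations) if durations else None


def weekly_rate(events: Iterable[datetime], start: datetime, end: datetime) -> float:
    """Events per week in [start, end): PR throughput if events are merge
    times, deployment frequency if they are deploy times."""
    weeks = (end - start) / timedelta(weeks=1)
    count = sum(1 for t in events if start <= t < end)
    return count / weeks


if __name__ == "__main__":
    t0 = datetime(2025, 1, 6, 9, 0)
    prs = [
        PullRequest(t0, t0 + timedelta(hours=2)),
        PullRequest(t0, t0 + timedelta(hours=4)),
        PullRequest(t0, t0 + timedelta(hours=10)),
        PullRequest(t0, None),  # still open: excluded from cycle time
    ]
    merges = [pr.merged_at for pr in prs if pr.merged_at is not None]
    print("median cycle time:", median_cycle_time(prs))  # 4:00:00
    print("merged PRs/week:", weekly_rate(merges, t0, t0 + timedelta(weeks=1)))  # 3.0
```

The median is used rather than the mean so that one PR left open for weeks does not dominate the figure. None of these metrics counts lines; whether the code came from a human or an agent is invisible to them, which is rather the point.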

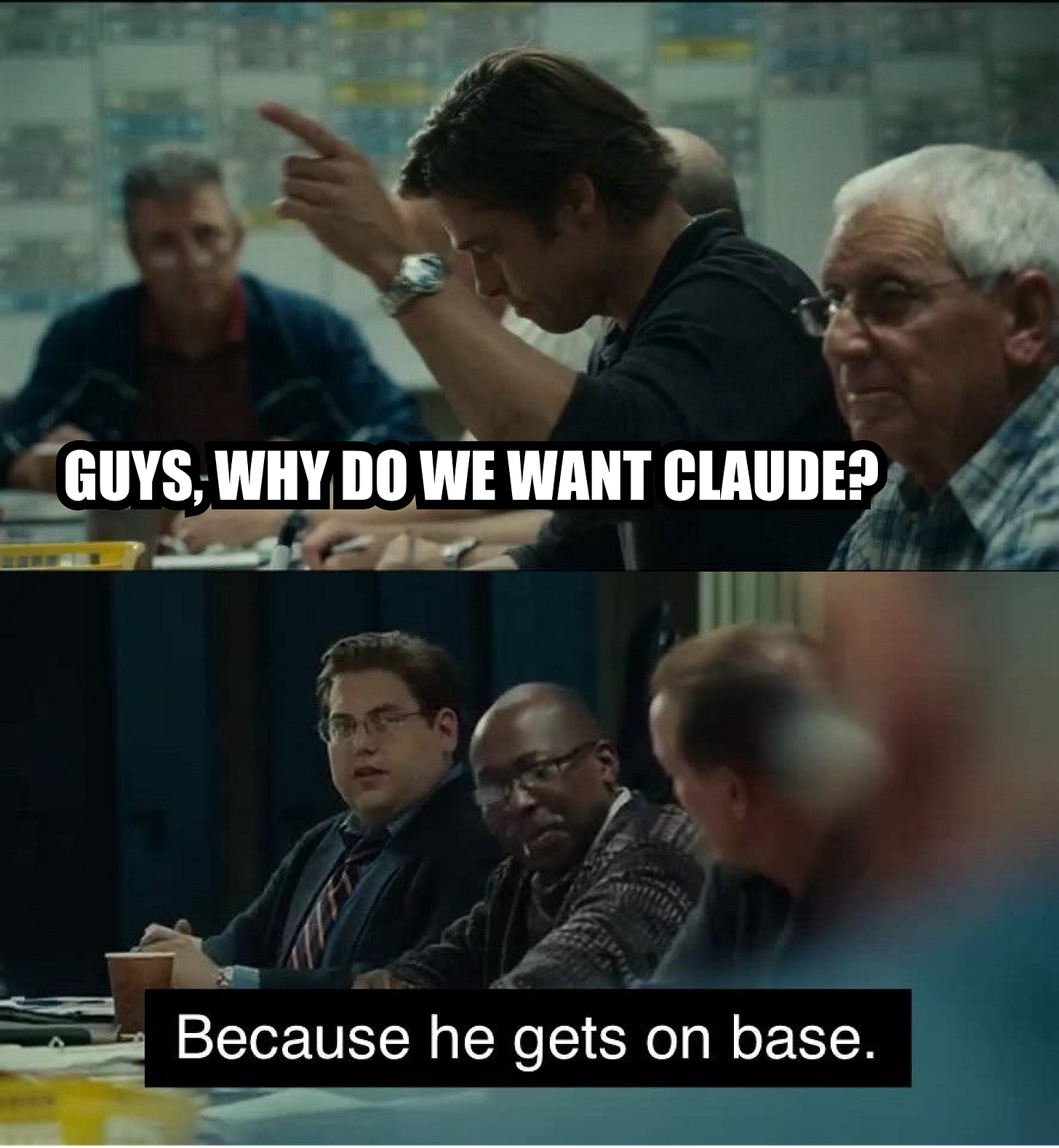

Ultimately, in Meta’s and Anthropic’s defense, even if they wanted to brag about their productivity from a more honest and substantial angle, there’s still no Bill James game score for engineering. So yes, the percentage of AI-generated code may be misleading and irrelevant, but it’s a pretty clever thing to say out loud. As we noted last week, SWE benchmarks are at best the least-bad way to measure accuracy or quality. Claiming you already rely heavily on your own models at scale (and these frontier labs aren’t exactly staffed with chumps) makes for a powerful punchline and a great sales pitch. Hats off 🎩.